Tuesday, November 15, 2016

College Majors with Fewer Women tend to have Larger Pay Gaps

Using the salaries from the Payscale report that controlled for these factors, I decided to analyze the pay gap further. I wanted to know how the percentage of women in a field affected the pay difference between men and women. Since many companies try to hire equal numbers of men and women for similar roles, and there are significantly fewer women in science and engineering, I assumed these few women would be able to demand a higher salary because of their scarcity. However, I found the opposite.

The interactive graphic below shows that college majors with fewer women tend to have larger differences in pay. (The graphics may be difficult to see on mobile; please switch to a desktop or use the mobile-friendly version.)

Two of the largest outliers are Nursing and Accounting. Nursing is 92.3% women, yet men still make about $2,400 more on average. Accounting is 52.1% women, with a pay difference of +$2,400 for men. One point of interest is elementary education, where women make 1.4% more than men.

The following graph shows the same results as above, but instead of absolute difference in dollars, the commonly used metric of women's salaries as a percentage of men's salaries is plotted.
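To make the two metrics concrete, here is a minimal sketch in R. The major names and salary figures are made up for illustration and are not taken from the Payscale data; the column names are also my own assumptions.

```r
# Hypothetical salaries for illustration only; these are not Payscale figures.
pay <- data.frame(
  major        = c("Major A", "Major B", "Major C"),
  men_salary   = c(60000, 50000, 40000),
  women_salary = c(54000, 48000, 40400)
)

# Absolute gap in dollars (positive means men earn more)
pay$gap_dollars <- pay$men_salary - pay$women_salary

# Women's salary as a percentage of men's salary
pay$women_pct_of_men <- 100 * pay$women_salary / pay$men_salary

print(pay)
```

A value above 100 in the last column, as in the third hypothetical row, corresponds to a field where women earn more than men.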

Any guess as to why this occurs would be largely speculative, but I imagine a “boys’ club” mentality could be to blame, where men prefer to hire men and are even willing to pay more to do so.

The links to the data sources can be found below and as always, add any suggestions in the comments.

Data Sources:

[1] http://www.payscale.com/career-news/2009/12/do-men-or-women-choose-majors-to-maximize-income

[2] https://docs.google.com/spreadsheets/d/1FDrXUk4t-RQekuKotqMD7pyGFmylHip-xarOawcVvqk/edit#gid=0

I am a PhD student in Industrial Engineering at Penn State University. I did my undergrad at Iowa State in Industrial Engineering and Economics. My academic website can be found here.

Monday, October 31, 2016

A Look at Lynching in the United States

I decided to analyze lynching in the United States. I found data on lynching from the Tuskegee Institute [1-2]. The data set covers lynchings from 1882 to 1962. I also looked for lynching statistics from before 1882 and found that, during slavery, lynching Africans was fairly uncommon because slave owners had a vested interest in keeping slaves alive.

The first bit of information I found shocking was that whites made up 27% of lynching victims. However, the share of white versus black victims varies widely from state to state. To understand this, the percentage of black victims was plotted by state. As might be expected, the Deep South had the highest percentage of black victims, followed by the rest of the South.

However, a look at the total number of lynchings showed that states in the Deep South did not contribute evenly. Mississippi, Georgia, and Texas had the most lynchings (581, 531, and 493, respectively), followed by a large drop-off to Louisiana and Alabama at 391 and 347. New Hampshire had zero lynchings, and Delaware, Maine, and Vermont had one each. New York and New Jersey had only two each.

Data Link and Notes:

[1] - http://law2.umkc.edu/faculty/projects/ftrials/shipp/lynchingsstate.html

[2] - http://law2.umkc.edu/Faculty/projects/ftrials/shipp/lynchingyear.html


Friday, October 14, 2016

Launching @BestOfData

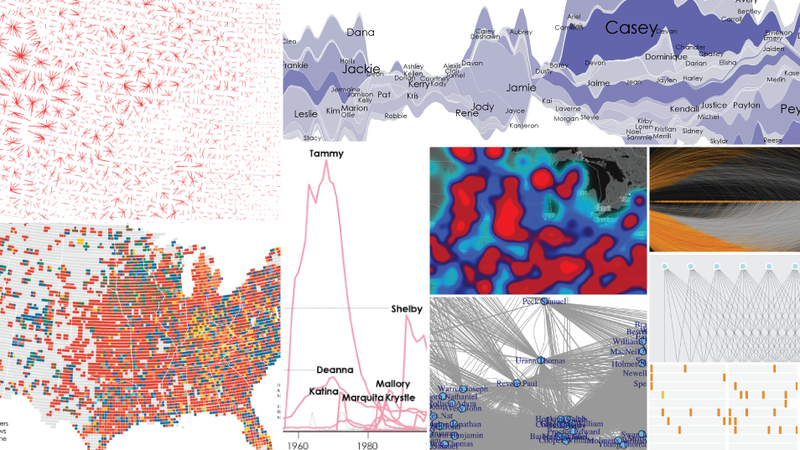

Since I started HallwayMathlete, many of my friends have taken an interest in data visualization and data journalism in general. They have asked for suggestions on other websites that tell top-quality stories through data analysis. The easiest answer is FiveThirtyEight. However, there are many smaller blogs that turn out fantastic material, but in low volume. These smaller websites tend not to have Twitter accounts, Facebook pages, or other venues for fans to follow their most recent work.

For this reason, I am launching @BestOfData. Best of Data is an aggregation of the best smaller data journalism blogs on the web. Currently, Best of Data automatically pulls from a list of my favorite blogs, and I will manually add other articles I find interesting.

The complete list of websites automatically populating @BestOfData:

FlowingData.com

HowMuch.net

RandalOlson.com/Blog

Priceonomics.com

InformationIsBeautiful.net

ToddwSchneider.com

Insidesamegrain.com

Any websites you think I should add to this list? Please add them in the comments.

If you are not a Twitter user (you should really have a Twitter), the list of articles can be found at BestOfData.org.


Sunday, August 7, 2016

Which is the best Pokemon in Pokemon GO?

Below are interactive graphics that display the average max CP of each Pokemon by trainer level. The first graph shows only the top Pokemon in Pokemon GO, the graphs that follow are grouped by type, and the final graph shows all Pokemon.

Tips:

- If you want to know which Pokemon a line represents, place your mouse on the line and the information will be displayed.

- To remove lines from the plot click the Pokemon's name in the legend.

- To see a graph in greater detail, click and drag over the region you wish to observe.

Best Pokemon:

1. Mewtwo

After trainer level 30 the CP limit for each Pokemon grows at a slower rate than previous levels.

- Bug

- Dragon

- Electric

- Fairy

- Fighting

- Fire

- Flying

- Ghost

- Grass

- Ground

- Ice

- Normal

- Poison

- Psychic

- Rock

- Steel

- Water

The data for the above plots comes from Serebii.net. In the following weeks, Hallway Mathlete will post a web-scraping tutorial showing how the data was gathered.


Sunday, July 3, 2016

Analyzing the Annual Republicans vs. Democrats Congressional Baseball Game

The graph below shows the net wins over the series. The higher a point sits on the y axis, the more Republican wins; the lower it sits, the more Democratic wins. From this graph it is fairly obvious that each party has had long winning streaks. The gray dots represent years when the game was not held or when I could not find any information about the game. In 1935, 1937, 1938, 1939, and 1941, games were held between members of Congress and the press.

Ironically, RFK Stadium, named after the famous Democratic U.S. Senator, has given Republicans a strong home-field advantage. Republicans have won 13 of the 14 games played at the stadium. Democrats have seen similar success at Nationals Park, winning 7 of the 9 games.
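For readers who want to reproduce a net-wins series like this, a running total is enough. The sketch below is in R and uses a made-up sequence of winners rather than the real game results; the coding of `NA` for a missing year is my own assumption.

```r
# Hypothetical sequence of winners, for illustration only (not the real results).
# NA marks a year with no game or no recorded result.
winners <- c("R", "R", "D", NA, "D", "D", "R")

# +1 for a Republican win, -1 for a Democratic win, 0 for a missing year
step <- ifelse(is.na(winners), 0, ifelse(winners == "R", 1, -1))

# Running net wins: above zero favors Republicans, below zero favors Democrats
net_wins <- cumsum(step)
print(net_wins)
```

Treating a missing year as 0 keeps the line flat for that year, which matches the idea of marking those years separately on the plot.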

Notes:

- The data came from https://en.wikipedia.org/wiki/Congressional_Baseball_Game#Game_results

- Some of the stadiums were renamed over the years, and the original data set contained both names. For the analysis, records for the same stadium were combined under its most recent name.


Sunday, May 29, 2016

Salaries of Presidential Primary Voters by Candidate and State

The following plot shows the distribution of average salaries for each presidential candidate. Again we see trends similar to before, with Kasich having a high average income and Clinton having a mix of low- and high-income supporters.

The last plot shows the relationship between the average salary of a state and the average salary of a candidate's supporters. The red line is a perfect 1-to-1 ratio: the closer a candidate is to the red line, the closer the candidate's supporters are to having the same salary as the average person in that state. Almost all the dots fall above the line because people with below-average salaries are less likely to vote.

If you have any suggestions for plots using this data, please share in the comment section.

Note:

[1] Not all states are included because not all states have held elections yet.


Monday, May 2, 2016

Introduction to Machine Learning with Random Forest

Part 1. Getting Started

First step, we will load the package and iris data set. The data set contains 3 classes of 50 instances each, where each class refers to a type of iris plant. One class is linearly separable from the other 2; the latter are NOT linearly separable from each other.

    install.packages('randomForest')
    library(randomForest)
    data(iris)
    head(iris)

Part 2. Fit Model

Now that we know what our data set contains, let's fit our first model. We will be fitting 500 trees in our forest and trying to classify the Species of each iris in the data set. For the randomForest() function, "~ ." means use all the other variables in the data frame as predictors.

    fit <- randomForest(Species ~ ., iris, ntree=500)

Note: a common mistake made by beginners is trying to classify a categorical variable that R sees as a character. To fix this, convert the variable to a factor like this:

    randomForest(as.factor(Species) ~ ., iris, ntree=500)
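The character-versus-factor issue mentioned in the note can be checked before any model is fit. Here is a small base R sketch; the `flowers` data frame is a made-up example, not part of the iris data set, and `stringsAsFactors = FALSE` is set explicitly to mimic a label that was read in as text.

```r
# Hypothetical data frame where the label was read in as text
flowers <- data.frame(
  Petal.Length = c(1.4, 4.7, 6.0, 1.3),
  Species      = c("setosa", "versicolor", "virginica", "setosa"),
  stringsAsFactors = FALSE
)

class(flowers$Species)    # "character"

# Convert the label once, up front, so every later model call sees a factor
flowers$Species <- as.factor(flowers$Species)

class(flowers$Species)    # "factor"
levels(flowers$Species)   # "setosa" "versicolor" "virginica"
```

Converting the column in the data frame, rather than inside the formula, is one way to avoid repeating as.factor() in every model call.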

The next step is to use the newly created model in the fit variable to predict the labels.

    results <- predict(fit, iris)
    summary(results)

After you have the predicted labels in a vector (results), the predicted and actual labels must be compared. This can be done with a confusion matrix. A confusion matrix is a table of the actual vs. the predicted labels, with the diagonal numbers being correctly classified elements while all others are incorrect.

Now we can take the diagonal points in the table and sum them; this will give us the total correctly classified instances. Then dividing this number by the total number of instances will calculate the percentage of predictions correctly classified.

    # Calculate the accuracy
    correctly_classified <- table(results, iris$Species)[1,1] + table(results, iris$Species)[2,2] + table(results, iris$Species)[3,3]
    total_classified <- length(results)
    # Accuracy
    correctly_classified / total_classified
    # Error
    1 - (correctly_classified / total_classified)
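The diagonal sum above can also be written without indexing each cell, which helps when there are more than three classes. The following is a minimal sketch in base R; the `actual` and `predicted` vectors are made up for illustration and are not the output of the model above.

```r
# Hypothetical predicted and actual labels, for illustration only
actual    <- factor(c("a", "a", "b", "b", "c", "c"), levels = c("a", "b", "c"))
predicted <- factor(c("a", "b", "b", "b", "c", "a"), levels = c("a", "b", "c"))

confusion <- table(predicted, actual)

# diag() pulls the correctly classified counts, whatever the number of classes
accuracy <- sum(diag(confusion)) / sum(confusion)

# Equivalent shortcut: the share of positions where the labels agree
accuracy_check <- mean(predicted == actual)

print(confusion)
print(accuracy)
```

Both calculations give the same number; the confusion matrix is still worth printing because it shows which classes are being mixed up.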

Part 3. Validate Model

    # How to split into a training set and a test set
    rows <- nrow(iris)
    col_count <- c(1:rows)
    # Row_ID is kept as a separate vector rather than a column of iris,
    # so that Species ~ . does not pick it up as a predictor
    Row_ID <- sample(col_count, rows, replace = FALSE)

    # Choose the percent of the data to be used in the training data
    training_set_size <- 0.80

    # Now split the data into training and test
    index_percentile <- rows * training_set_size
    # If the Row ID is not larger than the index percentile, the row is assigned to the training set
    train <- iris[Row_ID <= index_percentile, ]
    # If the Row ID is larger than the index percentile, the row is assigned to the test set
    test <- iris[Row_ID > index_percentile, ]

    train_data_rows <- nrow(train)
    test_data_rows <- nrow(test)
    total_data_rows <- (nrow(train) + nrow(test))
    train_data_rows / total_data_rows   # Now we have 80% of the data in the training set
    test_data_rows / total_data_rows    # Now we have 20% of the data in the test set

    # Now let's build the random forest using the train data set
    fit <- randomForest(Species ~ ., train, ntree=500)

    # Use the new model to predict the test set
    results <- predict(fit, test, type="response")

    # Confusion Matrix
    table(results, test$Species)

    # Calculate the accuracy
    correctly_classified <- table(results, test$Species)[1,1] + table(results, test$Species)[2,2] + table(results, test$Species)[3,3]
    total_classified <- length(results)
    # Accuracy
    correctly_classified / total_classified
    # Error
    1 - (correctly_classified / total_classified)
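The same 80/20 split can also be done by sampling row indices directly. This is a minimal base R sketch of that alternative, not the approach used above; the seed value is arbitrary and only there to make the split reproducible.

```r
data(iris)
set.seed(42)  # arbitrary seed so the split is reproducible

# Draw 80% of the row indices at random, without replacement
train_idx <- sample(nrow(iris), size = floor(0.80 * nrow(iris)))

train <- iris[train_idx, ]
test  <- iris[-train_idx, ]

nrow(train)  # 120
nrow(test)   # 30
```

Negative indexing with `-train_idx` keeps every row that was not drawn for training, so the two sets never overlap.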

Part 4. Model Analysis

After the model is created, understanding the relationship between variables and number of trees is important. R makes it easy to plot the errors of the model as the number of trees increases. This allows users to trade off between more trees and accuracy or fewer trees and lower computational time.

    fit <- randomForest(Species ~ ., train, ntree=500)
    results <- predict(fit, test, type="response")

    # Rank the input variables based on their effectiveness as predictors
    varImpPlot(fit)

    # To understand the error rate, let's plot the model's error as the number of trees increases
    plot(fit)

Part 5. Handling Missing Values

The last section of this tutorial involves one of the most time-consuming and important parts of the data analysis process: missing values. Very few machine learning algorithms can handle missing data. However, the randomForest package contains one of the most useful functions of all time, na.roughfix(). For numeric columns, na.roughfix() replaces the NAs with the column median; for factor columns, it replaces them with the most frequent level. For this section we will first create some NAs in the data set, then replace them and run the prediction algorithm.

    # Create some NAs in the data
    iris.na <- iris
    for (i in 1:4) iris.na[sample(150, sample(20, 1)), i] <- NA

    # Now we have a data frame with NAs
    View(iris.na)

    # Adding na.action=na.roughfix:
    # for numeric variables, NAs are replaced with column medians;
    # for factor variables, NAs are replaced with the most frequent levels (breaking ties at random)
    iris.narf <- randomForest(Species ~ ., iris.na, na.action=na.roughfix)

    results <- predict(iris.narf, train, type="response")
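To see what this kind of rough imputation does to a column, here is a base R approximation. The `rough_fill()` helper and the `toy` data frame are hypothetical and written only for this illustration; this is not the randomForest implementation, and unlike na.roughfix() it takes the first level on ties instead of breaking ties at random.

```r
# Hypothetical helper (not part of randomForest): fill the NAs in one column
rough_fill <- function(x) {
  if (is.numeric(x)) {
    # Numeric column: replace NAs with the median of the observed values
    x[is.na(x)] <- median(x, na.rm = TRUE)
  } else if (is.factor(x)) {
    # Factor column: replace NAs with the most frequent level
    counts <- table(x)  # table() leaves NAs out by default
    x[is.na(x)] <- names(counts)[which.max(counts)]
  }
  x
}

# Made-up data with one missing value per column
toy <- data.frame(
  size  = c(1, 2, NA, 10),
  color = factor(c("red", NA, "red", "blue"))
)

filled <- as.data.frame(lapply(toy, rough_fill))
print(filled)
```

The median is used rather than the mean so that a single large value, like the 10 in this toy column, does not pull the filled-in value upward.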
